For example, a new study by Christopher J.L. Murray at the University of Washington models hospital and ICU utilization and deaths over a 4-month period of mitigations, and estimates that “Total deaths” can be kept under 100,000.

A similar story is told by a recent model developed by a group of researchers and publicized by Nicholas Kristof of the New York Times. Their basic message? Social distancing for 2 months instead of 2 weeks could dramatically reduce the number of COVID-19 infections:

The same narrative appears in a recent study in The Lancet, whose authors modeled the effects of mitigations continuing in Wuhan through the beginning of March or through the beginning of April. Reporting their findings, the authors write that continuing mitigations until the beginning of April instead of the beginning of March “reduced the median number of infections by more than 92% (IQR 66–97) and 24% (13–90) in mid-2020 and end-2020, respectively.”

Hiding infections in the future is not the same as avoiding them

A keen figure-reader will notice something peculiar in Kristof’s figure. At the tail end of his “Social distancing for 2 months” scenario, there is an intriguing rise in the number of infections (could it be exponential?) right before the figure ends. That’s because of an inevitable feature of realistic epidemic models: once transmission rates return to normal, the epidemic will proceed largely as it would have without mitigations, unless a significant fraction of the population is immune (either because they have recovered from the infection or because an effective vaccine has been developed), or the infectious agent has been completely eliminated with no risk of reintroduction. In the model presented in Kristof’s article, assumptions about the seasonality of the virus, combined with the longer mitigation period, simply push the epidemic outside the window the modelers consider.

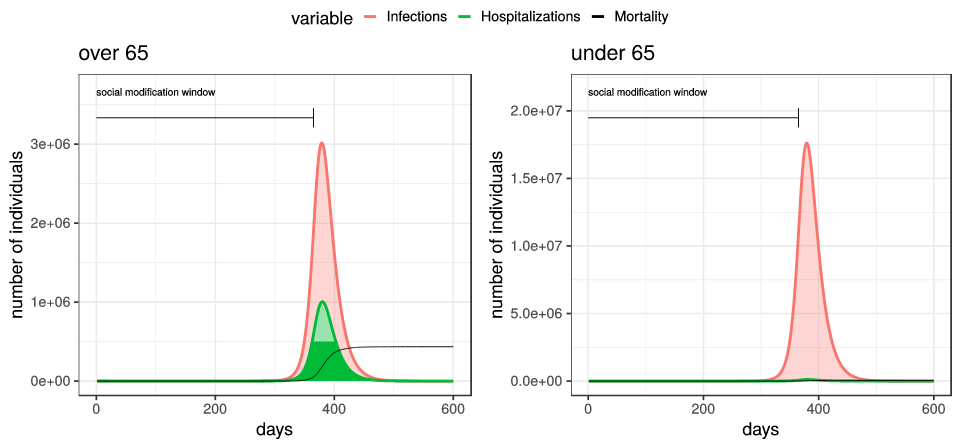

For example, in our work studying the possible effects of heterogeneous measures, we presented example epidemic trajectories for COVID-19 assuming either no mitigations at all, or extreme mitigations that are gradually lifted starting at 6 months, with transmission returning to normal levels at 1 year.

Unfortunately, extreme mitigation efforts that end (even gradually) reduce the number of deaths by only about 1%: as the efforts let up, we still see a full-scale epidemic, because almost none of the population has developed immunity to the virus.

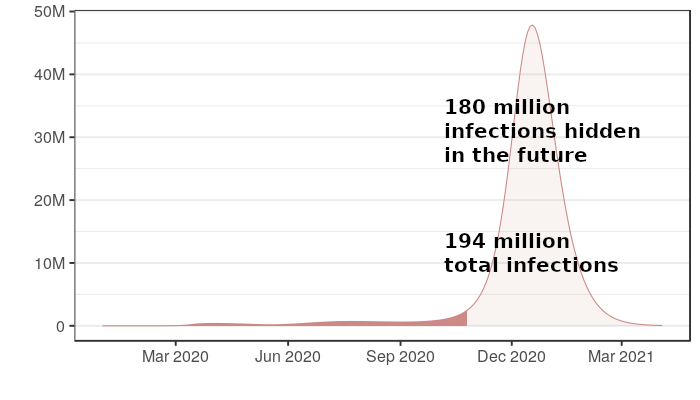

In Kristof’s article, the epidemic model is implemented in JavaScript and runs live in the user’s web browser. This means it is possible to hack the model to run past the end of October and look into the future: we can see what happens in the model after October, assuming mitigations continue for 2 months. In particular, instead of the right-hand figure here:

The truth for their “Social distancing for 2 months” scenario is this:

In this model, two months of mitigations do not improve the outcome of the epidemic; they only delay its terrible effects. In fact, because of the role of weather in the model presented in Kristof’s article, two months of mitigations actually result in 50% more infections and deaths than two weeks of mitigations: the longer mitigation pushes the peak of the epidemic into winter rather than summer, and the model assumes that warmer months have lower transmission rates.

The same thing plays out in other papers that model a low number of infections or deaths from short-term suppression efforts. For example, Murray’s paper models 4 months of mitigations, but only models the epidemic over a 4-month period ending in July. He concludes that fewer than 100,000 people will die in his model. But what happens in August? His model shows lower death counts precisely because only a small minority of the population becomes infected within his mitigation window. (In fact, because his approach is based on fitting a model to current data, it is unable to model a world in which transmission levels have returned to normal.) Yet as soon as transmission levels rise again, a large epidemic will follow, which he would detect if he modeled the epidemic beyond 4 months. Similarly, in the Lancet study modeling mitigations in Wuhan, the only effect of delaying the end of mitigations is to delay the epidemic: infections are “reduced” in “mid-2020” and “end-2020”, but increased at later time points.
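The window effect is easy to reproduce in a toy model. The sketch below is a deterministic SIR model on population fractions, integrated with forward Euler. Its parameters are hypothetical illustrations, not values taken from Murray’s, Kristof’s, or the Lancet models: a basic reproduction number of 2.5, a 10-day infectious period, mitigations that cut transmission by 70%, and a 100-day reporting window. The function name `simulate_sir` is also introduced here for illustration. The sketch compares cumulative infections at the end of the window with cumulative infections after two years.

```python
"""Toy SIR sketch: a fixed reporting window can make a delayed epidemic look averted.

All parameters are hypothetical illustrations, not values from the studies discussed.
"""


def simulate_sir(mitigation_days, beta=0.25, beta_mitigated=0.075, gamma=0.1,
                 i0=1e-4, days=730, dt=0.1):
    """Forward-Euler SIR model on population fractions.

    Transmission is reduced to `beta_mitigated` for the first `mitigation_days`
    days and returns to `beta` afterwards. Returns a list whose entry d is the
    cumulative fraction of the population ever infected by the end of day d.
    """
    s, i = 1.0 - i0, i0
    cumulative = [i0]                  # day 0: only the initial seed is infected
    steps_per_day = round(1 / dt)
    for day in range(1, days + 1):
        b = beta_mitigated if day <= mitigation_days else beta
        for _ in range(steps_per_day):
            new_infections = b * s * i * dt
            recoveries = gamma * i * dt
            s -= new_infections
            i += new_infections - recoveries
        cumulative.append(1.0 - s)     # everyone no longer susceptible was infected
    return cumulative


WINDOW = 100  # hypothetical reporting window, in days

for label, mitigation_days in [("no mitigation", 0), ("2 weeks", 14), ("2 months", 60)]:
    c = simulate_sir(mitigation_days)
    print(f"{label:>13}: {c[WINDOW]:.1%} infected by day {WINDOW}, "
          f"{c[-1]:.1%} after two years")
```

Under these assumptions, the 2-month scenario looks dramatically better at day 100, because its epidemic has barely started by then, while the two-year totals for all three scenarios are nearly identical. The apparent benefit comes from where the window ends, not from the mitigation.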

For two months of containment to be better than two weeks of containment, the situation on the ground has to change

There is a simple truth behind the problems with these modeling conclusions. The duration of containment efforts does not matter if transmission rates return to normal when they end and mortality rates have not improved. This is because, as long as a large majority of the population remains uninfected, lifting containment measures will lead to an epidemic almost as large as the one that would have occurred with no mitigations at all.
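Under the simplifying assumption of a standard SIR model with homogeneous mixing (the models discussed above are more elaborate), this can be made precise. Suppose mitigations end at time $t_1$, after which transmission returns to its normal rate $\beta$, with recovery rate $\gamma$ and $R_0 = \beta/\gamma$. With $S$, $I$, $R$ as population fractions:

$$
\frac{dS}{dt} = -\beta S I, \qquad \frac{dI}{dt} = \beta S I - \gamma I, \qquad \frac{dR}{dt} = \gamma I
\quad\Longrightarrow\quad \frac{dS}{dR} = -R_0\, S \qquad (t > t_1).
$$

Integrating from $t_1$ to $\infty$, and using $I(\infty) = 0$, $R(\infty) = 1 - S_\infty$ and $R(t_1) = 1 - S(t_1) - I(t_1)$:

$$
S_\infty = S(t_1)\, \exp\!\Big(-R_0\,\big[S(t_1) + I(t_1) - S_\infty\big]\Big).
$$

Suppose the mitigation phase ends with $S(t_1) \approx 1$ and a small but nonzero $I(t_1)$, meaning the infection has not been eliminated. Then the final fraction ever infected, $z = 1 - S_\infty$, is approximately the positive root of

$$
z = 1 - e^{-R_0 z},
$$

which is the same equation that holds with no mitigation at all. The length of the mitigation phase enters only through $S(t_1)$ and $I(t_1)$. For an illustrative $R_0 = 2.5$, the positive root is $z \approx 0.89$, whether mitigations lasted two weeks or two months.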

This is not to say there are no good reasons to use mitigations as a delaying tactic. For example, we may hope to use the months we buy with containment measures to improve hospital capacity, in the hope of reducing the mortality rate. We might even wish to use these months just to consider our options as a society and formulate a strategy. But mitigations themselves are not saving lives in these scenarios; instead, it is what we do with the time that gives us an opportunity to improve the outcome of the epidemic.

What makes an honest model?

There can be value in modeling the short-term effects of mitigations. For example, Murray’s study of ICU utilization over the next 4 months may have obvious relevance for short-term planning, and his paper is clear that it only models deaths over a 4-month period. But we take issue with models that study the effects of mitigations over a limited time frame when most of the impact of the epidemic would occur outside that time frame.

We should note that all the papers we quote here are clear about what they model, and none explicitly claims to model the number of infections or deaths over the entire course of the epidemic. Read to the end, Kristof’s column does contain an honest disclaimer:

A skeptic will note that these measures don’t seem to prevent a surge in infections so much as delay them (in some cases so that the impact is pushed beyond the period that this model tracks).

But by this point, after figures with “total infections” labeled in bold have been tweeted to millions of followers, the model has already played its role in misleading the public. Moreover, the fixed time window chosen for the model (one of the few parameters users cannot adjust in the app accompanying Kristof’s column) means that users can’t discover this basic truth for themselves.

In particular, we suggest that no model whose purpose is to study the overall benefits of mitigations should end at a time point before a steady state is reached. This is not the same as saying that modelers must assume the epidemic remains a threat until herd immunity is reached. Indeed, it is perfectly reasonable to model the effects of mitigation strategies if we assume that a vaccine will be available in 18 months, or that mortality 6 months from now could be reduced by new treatments, or that hospital capacity might be increased with the time bought by mitigations. But these are all assumptions that can and should be made explicit and quantitative in a model that attempts to estimate effects on overall mortality. Unless assumptions are made explicit, it is impossible to debate whether they are reasonable, or to estimate how sensitive the model’s conclusions are to them.
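One way to follow this advice is sketched below. It is again a toy deterministic SIR model with hypothetical parameters, not any published model. Every scenario assumption, including an optional vaccine date, is a named field of a hypothetical `Assumptions` class. The hypothetical `run_to_steady_state` function stops only when infections fall below a small tolerance *and* the effective reproduction number under normal transmission, `beta * s / gamma`, is below 1, so that normal transmission could not restart the epidemic. The vaccine is modeled crudely, purely as an assumption, by instantly immunizing every remaining susceptible on `vaccine_day`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Assumptions:
    """Every scenario assumption is an explicit, named field (all values hypothetical)."""
    beta: float = 0.25                  # normal transmission rate per day
    beta_mitigated: float = 0.075       # transmission rate while mitigations are in place
    gamma: float = 0.1                  # recovery rate per day
    mitigation_days: int = 60           # how long mitigations last
    vaccine_day: Optional[int] = None   # day all remaining susceptibles are immunized, if any
    i0: float = 1e-4                    # initial infected fraction


def run_to_steady_state(a, tol=1e-7, dt=0.1, max_days=10_000):
    """Simulate until a steady state rather than a fixed calendar date.

    Steady state here means infections are below `tol` and normal transmission
    could not make them grow again (beta * s / gamma < 1).
    Returns (day reached, cumulative fraction ever infected).
    """
    s, i, infected = 1.0 - a.i0, a.i0, a.i0
    steps_per_day = round(1 / dt)
    for day in range(1, max_days + 1):
        if a.vaccine_day is not None and day == a.vaccine_day:
            s = 0.0  # crude assumption: instant, perfectly effective vaccination
        b = a.beta_mitigated if day <= a.mitigation_days else a.beta
        for _ in range(steps_per_day):
            new = b * s * i * dt
            s -= new
            i += new - a.gamma * i * dt
            infected += new  # vaccinated people are not counted as infected
        if i < tol and a.beta * s / a.gamma < 1:
            return day, infected
    raise RuntimeError("no steady state reached within max_days")


for label, scenario in [
    ("2 months of mitigation", Assumptions(mitigation_days=60)),
    ("18 months, no vaccine", Assumptions(mitigation_days=540)),
    ("18 months, then vaccine", Assumptions(mitigation_days=540, vaccine_day=540)),
]:
    day, infected = run_to_steady_state(scenario)
    print(f"{label}: steady state on day {day}, {infected:.1%} ever infected")
```

Under these assumptions, 18 months of suppression without a vaccine ends with roughly the same total as 2 months, while the same suppression followed by a vaccine keeps the total small. The benefit comes from the explicit vaccine assumption, which is exactly what a reader should be able to see and debate. A deterministic model never drives infections to exactly zero, so the no-vaccine run resurges from a vanishingly small seed; capturing outright elimination would require a stochastic model.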

Where we are now

Nations around the world are staring down a host of terrible options. Business as usual means overrun hospitals and large numbers of preventable deaths. One or two years of suppression measures while waiting for a vaccine means a global shutdown whose ramifications will require input from experts across many domains to understand fully. The viability of middle roads, which might attempt to replace suppression efforts with contact tracing while allowing normal social and economic activity, is still debated by experts.

What should be absolutely clear is that hard decisions lie ahead, and that there are no easy answers. The team at Imperial College London, whose recent study currently serves as the basis for the U.K.’s new containment efforts, summarizes it this way:

It is important to note that we do not quantify the wider societal and economic impact of such intensive suppression approaches; these are likely to be substantial. Nor do we quantify the potentially different societal and economic impact of mitigation strategies. Moreover, for countries lacking the infrastructure capable of implementing technology-led suppression maintenance strategies such as those currently being pursued in Asia, and in the absence of a vaccine or other effective therapy (as well as the possibility of resurgence), careful thought will need to be given to pursuing such strategies in order to avoid a high risk of future health system failure once suppression measures are lifted.

Regardless of which strategies various governments eventually turn to in the fight against COVID-19, their success will hinge in large part on the cooperation of the public: maintaining effective suppression on a timescale of years, for example, would require extraordinary levels of compliance from citizens. The public should not be misled by false stories of hope meant to motivate behavior in the short term. Public health depends on public trust. If we claim now that our models show that 2 months of mitigations will cut deaths by 90%, why will anyone believe us 2 months from now when the story has to change?